Until now I have used vector.dev as my workhorse to collect logs and metrics from my systems and deliver them to SigLens. In the meantime there is a new kid on the block: Grafana Alloy. In this article I will show you how to connect Grafana Alloy to your SigLens instance.

Introduction

In the past I showed you how to get logs and metrics into SigLens. For this purpose I used vector.dev, which is a really nice and convenient component. Grafana Alloy is now an alternative. A few days ago I saw a quick setup from Christian Lempa; it is worth a look.

Overview

Grafana Alloy follows more or less the same concept as vector.dev: you define sources, filters/transformers and destinations. Sounds familiar, right? The main difference is the configuration file: vector.dev uses a YAML file, whereas Grafana Alloy uses its own configuration syntax.

In this scenario the backend, SigLens, stays the same. If you are not familiar with SigLens, see my other posts for more details.

Grafana Alloy

I deploy Grafana Alloy as a Docker container on my system. Since I manage my systems with Ansible, the corresponding Docker task looks like the following:

    - name: Create/Start Grafana Alloy (Log Collector)
      docker_container:
        name: alloy
        image: grafana/alloy:latest
        hostname: dmz24
        pull: true
        state: started
        restart: yes
        restart_policy: always
        detach: yes
        volumes:
          - "/data/alloy/config/config.alloy:/etc/alloy/config.alloy:ro"
          - "/data/alloy/data:/var/lib/alloy/data"
          - "/var/run/docker.sock:/var/run/docker.sock"
          - "/:/rootfs:ro"
          - "/run:/run:ro"
          - "/var/log:/var/log:ro"
          - "/sys:/sys:ro"
          - "/var/lib/docker/:/var/lib/docker/:ro"
          - "/run/udev/data:/run/udev/data:ro"
        command:
          - "run"
          - "--server.http.listen-addr=0.0.0.0:12345"
          - "--storage.path=/var/lib/alloy/data"
          - "/etc/alloy/config.alloy"
        labels:
          com.centurylinklabs.watchtower.enable: "true"
        env:
          TZ: "Europe/Zurich"

The Docker section is adapted from the compose.yml in Christian Lempa’s boilerplates.
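If you want to verify that the container actually came up, a small check against Alloy's HTTP server can help. The following is only a minimal sketch in Python and not part of the setup described here. It assumes that Alloy answers on the path `/-/ready` on the listen port 12345 configured in the task above; the helper names (`ready_url`, `http_status`, `alloy_is_ready`) are made up for illustration. Note that the task above does not publish port 12345, so the check has to run from a place that can reach the container directly, for example by using the container's IP address.

```python
import urllib.error
import urllib.request


def ready_url(host: str, port: int = 12345) -> str:
    """Build the readiness URL (assumption: Alloy serves /-/ready on its HTTP port)."""
    return f"http://{host}:{port}/-/ready"


def http_status(url: str, timeout: float = 3.0) -> int:
    """Return the HTTP status code of a GET request, or 0 if the host is unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered, but with an error status (e.g. 503).
        return err.code
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, DNS failure, ...
        return 0


def alloy_is_ready(host: str, port: int = 12345, fetch=http_status) -> bool:
    """True if the readiness endpoint answers with HTTP 200.

    `fetch` is injectable so the logic can be tried without a running container.
    """
    return fetch(ready_url(host, port)) == 200


# Example call (the IP is a placeholder, replace it with your container's address):
# print(alloy_is_ready("172.17.0.2"))
```

If the check returns `False`, the container logs (`docker logs alloy`) are usually the first place to look.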

Configuration

The configuration is also basically the same as the config.alloy example from Christian’s boilerplates.

The only part that changes is the “TARGETS” section, which has to be adapted for SigLens:

    loki.write "default" {
      endpoint {
        url = "http://<your SigLens instance>:8081/loki/api/v1/push"
      }
      external_labels = {}
    }

    prometheus.remote_write "default" {
      endpoint {
        url = "http://<your SigLens instance>:8081/promql/api/v1/write"
      }
    }

Please note the URLs in the endpoint definitions:

- Logs: http://<your SigLens instance>:8081/loki/api/v1/push (instead of http://loki:3100/loki/api/v1/push)

- Prometheus metrics: http://<your SigLens instance>:8081/promql/api/v1/write (instead of http://prometheus:9090/api/v1/write)
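To smoke-test the log endpoint before wiring up Alloy, you could push a single log line by hand. The sketch below is in Python and uses the JSON variant of the Loki push format (a list of streams, each with a label set and `[timestamp in nanoseconds, line]` pairs). It assumes that SigLens accepts this JSON body on the URL listed above in the same way Loki does; the function names and the `smoke-test` label are made up for illustration.

```python
import json
import time
import urllib.request
from typing import Dict, List, Optional


def loki_push_url(siglens_host: str, port: int = 8081) -> str:
    """Build the Loki-compatible push URL of a SigLens instance."""
    return f"http://{siglens_host}:{port}/loki/api/v1/push"


def build_push_payload(labels: Dict[str, str], lines: List[str],
                       now_ns: Optional[int] = None) -> dict:
    """Build a Loki push body: one stream, all lines stamped with the same time."""
    ts = str(time.time_ns() if now_ns is None else now_ns)  # Loki expects a string
    return {
        "streams": [
            {
                "stream": dict(labels),
                "values": [[ts, line] for line in lines],
            }
        ]
    }


def push(url: str, payload: dict, timeout: float = 5.0) -> int:
    """POST the payload as JSON and return the HTTP status code."""
    body = json.dumps(payload).encode("utf-8")
    req = urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status


# Example call (host name is a placeholder):
# payload = build_push_payload({"job": "smoke-test"}, ["hello from python"])
# print(push(loki_push_url("siglens.example"), payload))
```

If the request succeeds, the line should show up in SigLens; if it fails, the status code is a hint whether the URL or the port is wrong.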

For reference, here is the complete configuration file:

    /* Grafana Alloy Configuration Examples
     * ---
     * LINK: For more details, visit https://github.com/grafana/alloy-scenarios
     */

    // SECTION: TARGETS

    loki.write "default" {
      endpoint {
        url = "http://<your SigLens instance>:8081/loki/api/v1/push"
      }
      external_labels = {}
    }

    prometheus.remote_write "default" {
      endpoint {
        url = "http://<your SigLens instance>:8081/promql/api/v1/write"
      }
    }

    // !SECTION

    // SECTION: SYSTEM LOGS & JOURNAL

    loki.source.journal "journal" {
      max_age       = "24h0m0s"
      relabel_rules = discovery.relabel.journal.rules
      forward_to    = [loki.write.default.receiver]
      labels        = {component = string.format("%s-journal", constants.hostname)}
      // NOTE: This is important to fix https://github.com/grafana/alloy/issues/924
      path          = "/var/log/journal"
    }

    local.file_match "system" {
      path_targets = [{
        __address__ = "localhost",
        __path__    = "/var/log/{syslog,messages,*.log}",
        instance    = constants.hostname,
        job         = string.format("%s-logs", constants.hostname),
      }]
    }

    discovery.relabel "journal" {
      targets = []
      rule {
        source_labels = ["__journal__systemd_unit"]
        target_label  = "unit"
      }
      rule {
        source_labels = ["__journal__boot_id"]
        target_label  = "boot_id"
      }
      rule {
        source_labels = ["__journal__transport"]
        target_label  = "transport"
      }
      rule {
        source_labels = ["__journal_priority_keyword"]
        target_label  = "level"
      }
    }

    loki.source.file "system" {
      targets    = local.file_match.system.targets
      forward_to = [loki.write.default.receiver]
    }

    // !SECTION

    // SECTION: SYSTEM METRICS

    discovery.relabel "metrics" {
      targets = prometheus.exporter.unix.metrics.targets
      rule {
        target_label = "instance"
        replacement  = constants.hostname
      }
      rule {
        target_label = "job"
        replacement  = string.format("%s-metrics", constants.hostname)
      }
    }

    prometheus.exporter.unix "metrics" {
      disable_collectors = ["ipvs", "btrfs", "infiniband", "xfs", "zfs"]
      enable_collectors  = ["meminfo"]
      filesystem {
        fs_types_exclude     = "^(autofs|binfmt_misc|bpf|cgroup2?|configfs|debugfs|devpts|devtmpfs|tmpfs|fusectl|hugetlbfs|iso9660|mqueue|nsfs|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|selinuxfs|squashfs|sysfs|tracefs)$"
        mount_points_exclude = "^/(dev|proc|run/credentials/.+|sys|var/lib/docker/.+)($|/)"
        mount_timeout        = "5s"
      }
      netclass {
        ignored_devices = "^(veth.*|cali.*|[a-f0-9]{15})$"
      }
      netdev {
        device_exclude = "^(veth.*|cali.*|[a-f0-9]{15})$"
      }
    }

    prometheus.scrape "metrics" {
      scrape_interval = "15s"
      targets         = discovery.relabel.metrics.output
      forward_to      = [prometheus.remote_write.default.receiver]
    }

    // !SECTION

    // SECTION: DOCKER METRICS

    prometheus.exporter.cadvisor "dockermetrics" {
      docker_host      = "unix:///var/run/docker.sock"
      storage_duration = "5m"
    }

    prometheus.scrape "dockermetrics" {
      targets         = prometheus.exporter.cadvisor.dockermetrics.targets
      forward_to      = [prometheus.remote_write.default.receiver]
      scrape_interval = "10s"
    }

    // !SECTION

    // SECTION: DOCKER LOGS

    discovery.docker "dockerlogs" {
      host = "unix:///var/run/docker.sock"
    }

    discovery.relabel "dockerlogs" {
      targets = []
      rule {
        source_labels = ["__meta_docker_container_name"]
        regex         = "/(.*)"
        target_label  = "service_name"
      }
    }

    loki.source.docker "default" {
      host          = "unix:///var/run/docker.sock"
      targets       = discovery.docker.dockerlogs.targets
      labels        = {"platform" = "docker"}
      relabel_rules = discovery.relabel.dockerlogs.rules
      forward_to    = [loki.write.default.receiver]
    }

    // !SECTION
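One detail of the DOCKER LOGS section that is easy to overlook is the relabel rule with the regex "/(.*)". Docker reports container names with a leading slash, and the rule copies the name without that slash into the label service_name. The Python sketch below imitates this behaviour to make it visible. It is a simplified model, not Alloy's implementation: it assumes the Prometheus-style defaults (action "replace", fully anchored regex, replacement is the first capture group, source labels joined with ";"), and the function name `apply_replace_rule` is made up for illustration.

```python
import re
from typing import Dict, List


def apply_replace_rule(labels: Dict[str, str], source_labels: List[str],
                       regex: str, target_label: str,
                       replacement: str = r"\1",
                       separator: str = ";") -> Dict[str, str]:
    """Simplified model of a relabel rule with action "replace".

    The values of the source labels are joined, matched against the fully
    anchored regex and, on a match, the expanded replacement is written to
    the target label. Without a match the labels stay unchanged.
    """
    value = separator.join(labels.get(name, "") for name in source_labels)
    match = re.fullmatch(regex, value)  # relabel regexes are anchored at both ends
    result = dict(labels)
    if match is not None:
        result[target_label] = match.expand(replacement)
    return result


# The rule from the configuration above, applied to a container named "alloy":
discovered = {"__meta_docker_container_name": "/alloy"}
relabeled = apply_replace_rule(
    discovered,
    source_labels=["__meta_docker_container_name"],
    regex="/(.*)",
    target_label="service_name",
)
print(relabeled["service_name"])  # prints: alloy
```

In SigLens you can then filter the Docker logs by service_name without having to deal with the leading slash.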

That’s it. You now have a running log and metrics collector that sends its data to SigLens.

SigLens

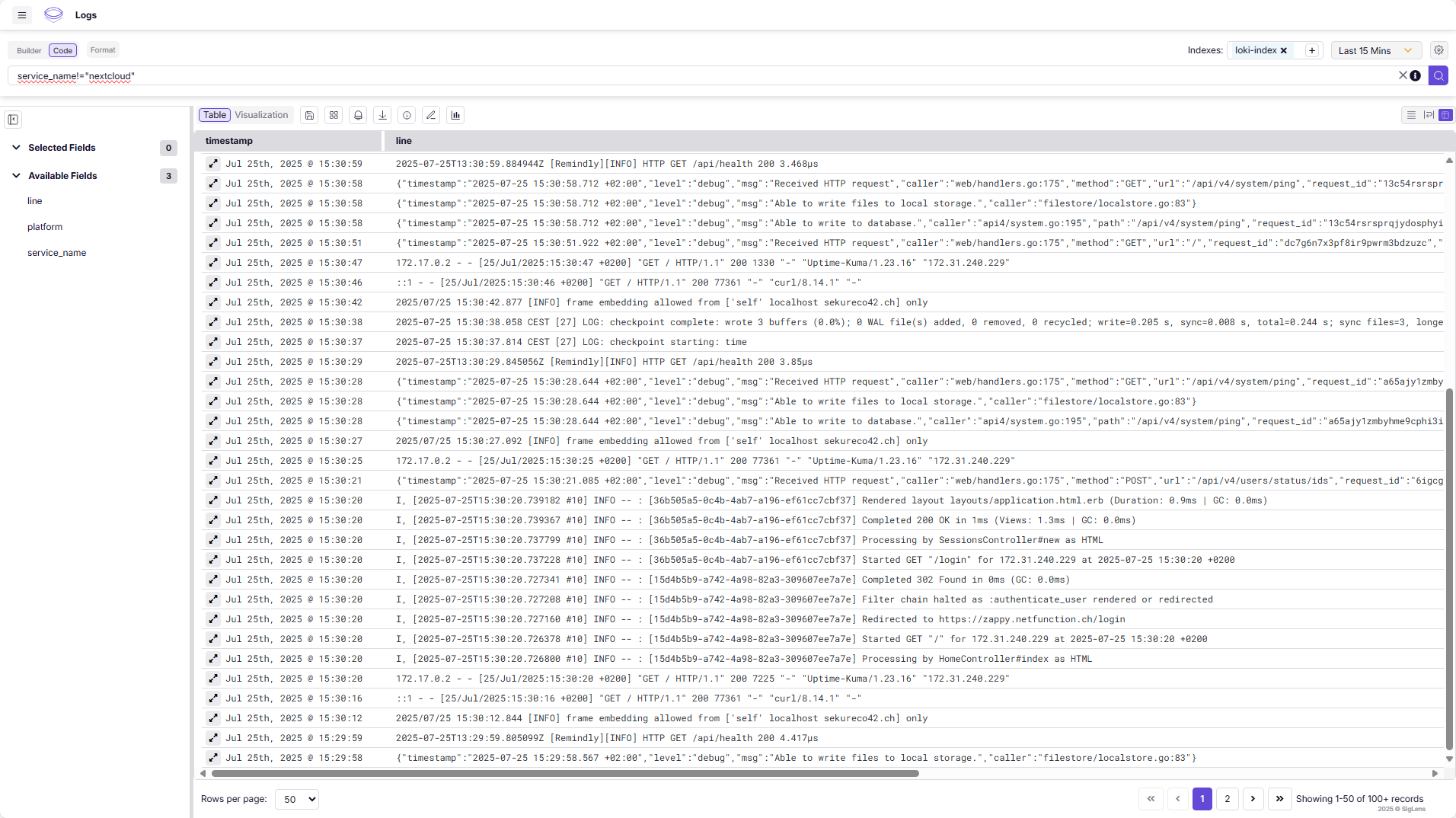

Now you are able to query for your log data in SigLens. Be aware that this setup of Grafana Alloy creates the index loki-index.

loki-index.

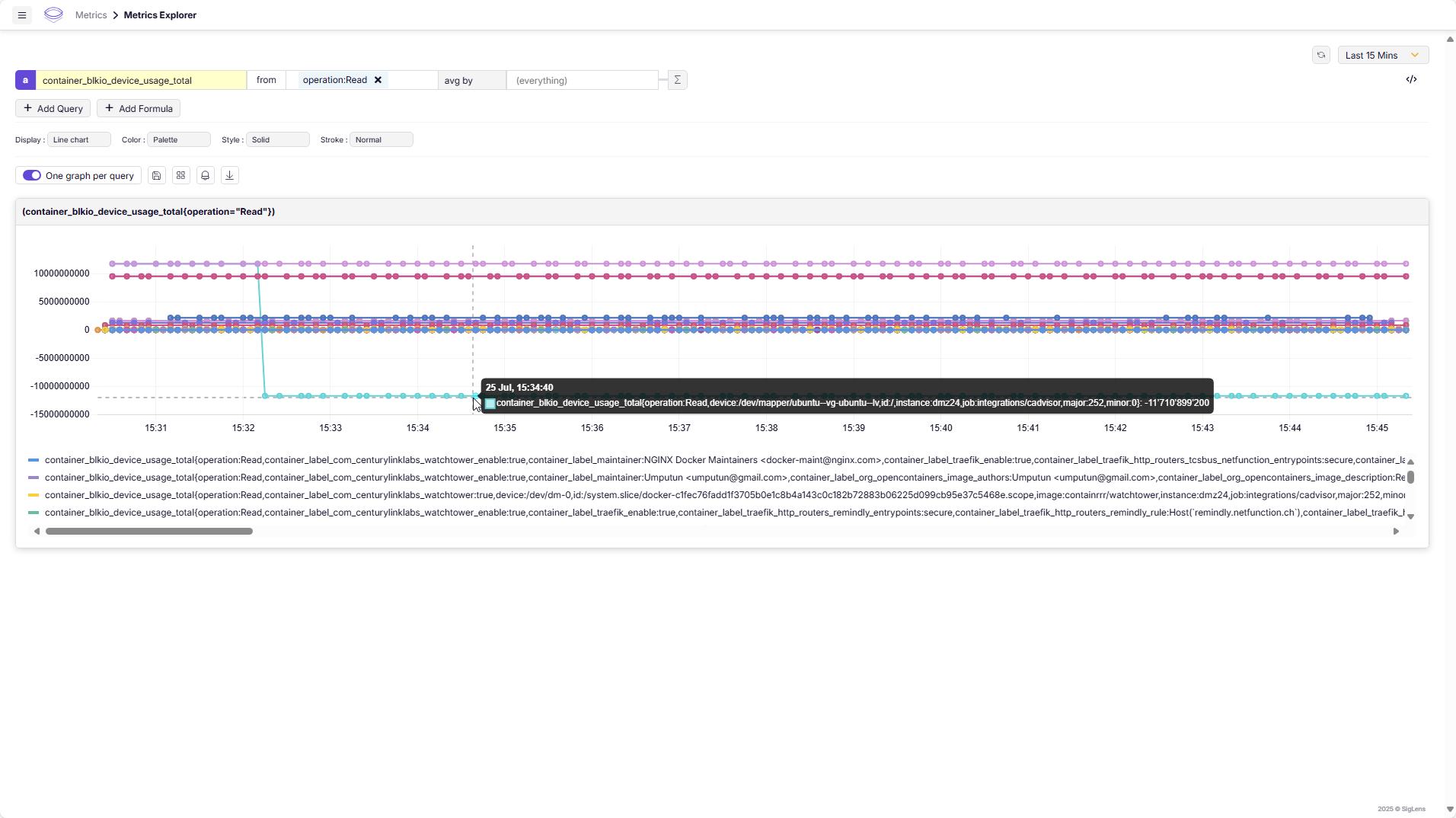

Or metrics:

Summary

Grafana Alloy is a simple replacement for vector.dev, and for other collectors as well. It is mainly intended as a replacement for promtail & Co. in the Grafana/Loki world, but it can also be used with SigLens thanks to the API compatibility of SigLens with Loki and Prometheus. So you have the option to use Grafana Alloy as your tool for collecting logs and metrics from your hosts and/or Docker environments.

Further Reading

Here are some links:

- Grafana Alloy, log and metric ingestion written in Go: https://grafana.com/docs/alloy/latest/

- Boilerplates from Christian Lempa: https://github.com/ChristianLempa/boilerplates/tree/main/docker-compose/alloy

- SigLens, log management solution written in Go: https://siglens.com/